(Video: Dot, Hot, and Lot in “Ballet for dark suits and greasy wash water”)

I’m back from an intense week at ICASSP 2015. As I mentioned in my previous post, one of my talks was about our proposal to use mobile robots to collect large and rich audio datasets at low cost. We call these robots MICbots, because we use them to record audio data with, you guessed it, microphones, and also because they are a joint effort by MERL, Inria and Columbia (MIC! how convenient). Our idea is to have several of these robots recreate a cocktail party-like scenario, with each robot outputting pre-recorded speech signals from existing automatic speech recognition (ASR) datasets through a loudspeaker, and simultaneously recording through a microphone array. This has many advantages:

- we know each of the speech signals that are played;

- these speech signals have already been annotated for ASR (word content and alignment information);

- we can know the “speaker” location;

- the mixture is acoustically realistic, as it is physically done in a real room;

- the sources are moving;

- we can let the robots run while we’re away, attending real cocktail parties;

- we can envision transporting a particular experimental setup into different environments.

The first point, about the availability of ground truth speech signals, is crucial to be able to measure source separation performance, as well as when using state-of-the-art methods relying on discriminative training. These methods typically try to learn a mapping from noisy signals to clean signals, and thus need parallel pairs of training data.

At the time of submission, we only had a concept to propose, backed up with a study of existing robust speech processing datasets (following the extended overview that Emmanuel and I put together on the RoSP wiki and in a technical report) that showed the need for a new way to collect data. But we really didn’t want the MICbots to become vaporware, so we spent a couple of (very fun) weeks actually building them ahead of the conference. Striving for simplicity and low cost, we relied mostly on off-the-shelf components:

The moving part of the robot is the Create 2 by iRobot, which is basically a refurbished Roomba without some of the vacuuming parts, intended for use in research and education (I hear that they are unfortunately only available in the US for now; an alternative, other than simply using a Roomba, is the Kobuki/Turtlebot platform). The loudspeaker is a Jabra 410 USB speaker, popular for Skype and other VoIP applications. The microphone array is the unbelievably cheap (in the US) PlayStation Eye: for $8 (surprisingly, it’s $25 in Japan…), you get a high-FPS camera together with a linear 4-channel microphone array!

To control the robot, play the sounds and record, we went with a Raspberry Pi model B+, which we connect to remotely through a wifi dongle. Of course, we ordered the Pis the day before the new and much more powerful Raspberry Pi 2 was announced, but well, the B+ is enough for now, and at $35 it’s not too painful to upgrade.

With the Jabra speaker, 2 PS Eyes and the wifi dongle connected, the Pi draws around 500 mA. The Pi (and all the devices connected through it) gets its power from 6 D cells, connected through a buck converter that steps the voltage down to 5 V. We considered tapping the Create 2’s power, so that the experiments could be fully autonomous with the Create 2 going back to its base to recharge, but the current setup is simpler and can easily run overnight, so we could always change the batteries after a night’s worth of data collection.
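As a rough sanity check on the "can easily run overnight" claim, here is a back-of-the-envelope runtime estimate. It takes the figure of about 500 quoted above as a current draw in mA at 5 V. The cell capacity (12 Ah per alkaline D cell) and the buck converter efficiency (85%) are my assumptions for illustration, not measured values, and the nominal 1.5 V per cell is optimistic since the voltage sags as the cells discharge.

```python
def runtime_hours(n_cells, cell_voltage_v, cell_capacity_ah,
                  load_voltage_v, load_current_a, converter_efficiency):
    """Estimate runtime of a load fed from series cells through a DC-DC converter."""
    # Cells in series: voltages add, capacity (Ah) stays that of a single cell.
    battery_energy_wh = n_cells * cell_voltage_v * cell_capacity_ah
    load_power_w = load_voltage_v * load_current_a
    # Only a fraction of the battery energy reaches the load.
    return battery_energy_wh * converter_efficiency / load_power_w


# Assumed values: 12 Ah per D cell and 85% converter efficiency are illustrative.
estimate = runtime_hours(n_cells=6, cell_voltage_v=1.5, cell_capacity_ah=12.0,
                         load_voltage_v=5.0, load_current_a=0.5,
                         converter_efficiency=0.85)
print(f"Estimated runtime: {estimate:.0f} h")
```

Under these assumptions the estimate comes out at roughly 37 hours, so even with a generous safety margin a single set of batteries should cover a night of recording.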

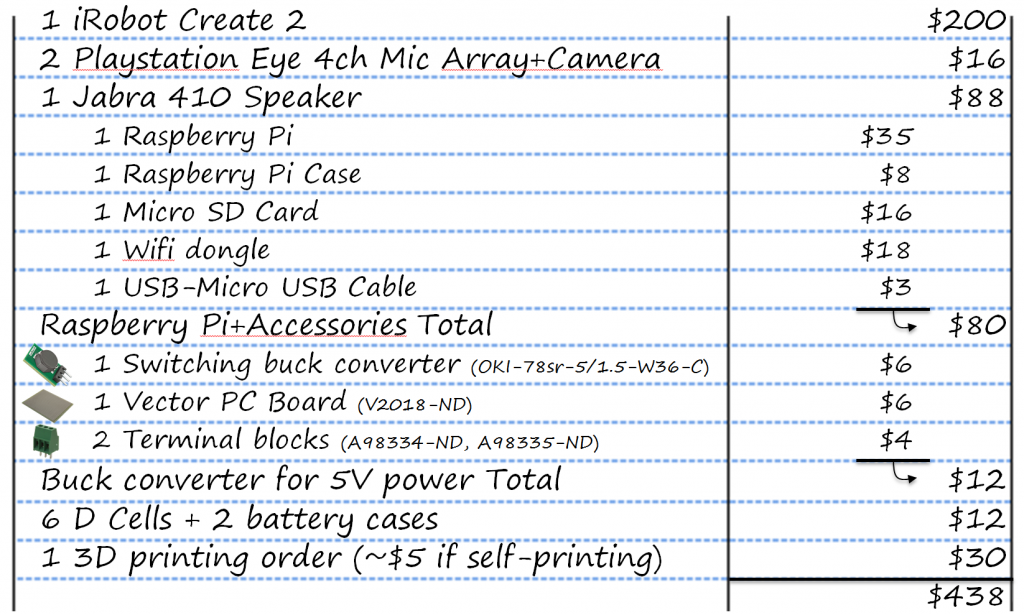

Altogether, we were able to build them for slightly more than $400 per robot. Here is the budget for one robot:

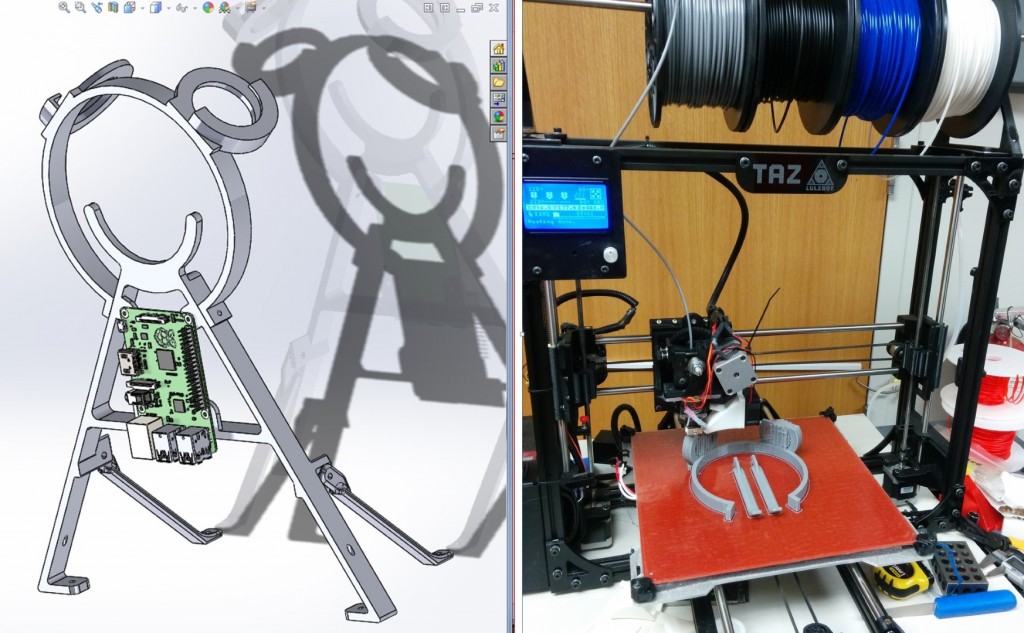

To bring all the parts together, we designed and 3D-printed a mounting frame (we plan to release the CAD files in the future, once we settle on a final design):

Once assembled, we get this:

Cute, aren’t they?

In the video above, the robots are following a simple random walk, stopping and turning by a random angle every time one of their (many) obstacle sensors is triggered. They are controlled by the Raspberry Pi through their serial port using Python code I derived from PyRobot by Damon Kohler. The interface code had to be modified for the Create 2, as iRobot made some changes between the Create 1 and the Create 2 (see the Create 2 Open Interface Specification), and it is available for download: PyRobot 2. Note that this is only the code that allows one to communicate with and access the basic functions of the Create 2. I plan on releasing the MICbot code built on top of it as well once it is in a more final shape.
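To give an idea of what the random walk looks like at the serial level, here is a minimal sketch. It is not the PyRobot 2 or MICbot code: the function names, the 200 mm/s speed, the range of turn angles and the 235 mm wheel base are assumptions for illustration. The Drive opcode (137) and the special radius values follow the Open Interface specification. The sketch only builds the command bytes, so it runs without a robot attached.

```python
import math
import random
import struct

# Opcode and special radii as listed in the Create 2 Open Interface spec.
OP_DRIVE = 137
STRAIGHT = -32768          # 0x8000: drive straight
SPIN_CW, SPIN_CCW = -1, 1  # turn in place, clockwise / counter-clockwise

WHEEL_BASE_MM = 235.0      # assumed distance between the two drive wheels


def drive_command(velocity_mm_s, radius_mm):
    """Pack a Drive command: opcode, then velocity and radius as
    signed 16-bit big-endian integers."""
    return struct.pack(">Bhh", OP_DRIVE, velocity_mm_s, radius_mm)


def next_commands(obstacle_detected, rng, speed_mm_s=200):
    """Return a list of (command_bytes, duration_s) pairs for one walk step."""
    if not obstacle_detected:
        # Nothing in the way: keep going straight for a short time slice.
        return [(drive_command(speed_mm_s, STRAIGHT), 0.1)]
    angle = rng.uniform(math.pi / 4, math.pi)   # hypothetical angle range
    spin = rng.choice([SPIN_CW, SPIN_CCW])
    # Spinning in place at wheel speed v turns the robot at 2*v/wheel_base rad/s.
    turn_time = angle * WHEEL_BASE_MM / (2 * speed_mm_s)
    return [(drive_command(0, STRAIGHT), 0.0),  # stop first
            (drive_command(speed_mm_s, spin), turn_time)]


rng = random.Random()
for command, duration in next_commands(obstacle_detected=True, rng=rng):
    print(command.hex(), f"hold for {duration:.2f} s")
```

On the actual robot, each command would be written to the serial port and held for the given duration, with `obstacle_detected` derived from the sensor packets the Create 2 reports.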

We still have some work before we can release an actual dataset. We need first and foremost to settle on a recording protocol: where should the robots be when they “speak”, should they move while speaking, what should be the timing of the utterances by each robot, etc. We also need to work on a few issues, mainly how to effectively align all the audio streams and the references, how to account for the loudspeaker channel, and how to accurately measure the speaker locations.
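On the alignment issue, one common starting point is to estimate the delay between a played reference and a recording by cross-correlation, picking the lag with the highest score. The sketch below is only an illustration of that general idea, not a method we have settled on. The function name and the toy signals are made up, and real recordings would also need to handle clock drift, reverberation and the loudspeaker response.

```python
def estimate_delay(reference, recording, max_lag):
    """Return the lag in samples (0..max_lag) at which the recording
    best matches the reference, using plain cross-correlation."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(max_lag + 1):
        # Correlate the reference with the recording shifted by `lag` samples.
        score = sum(r * recording[i + lag]
                    for i, r in enumerate(reference)
                    if i + lag < len(recording))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


# Toy example: the "recording" is the reference, attenuated and delayed by 7 samples.
reference = [0.0, 1.0, -2.0, 3.0, -1.0, 0.5]
recording = [0.0] * 7 + [0.5 * x for x in reference] + [0.0] * 3
print(estimate_delay(reference, recording, max_lag=10))
```

This brute-force version is quadratic in the signal length, so for full utterances an FFT-based correlation would be the more practical choice.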

On those questions and everything else, we welcome suggestions and comments. For future updates, please check my MICbots page.


Many thanks to my colleague John Barnwell for his tremendous help designing and building the robots.